DavorLauc_ReasoningInexactTemporalExpressions - Dr. Davor Lauc - University of Hamburg

- Modelling Vagueness and Uncertainty in Digital Humanities

Davor Lauc, University of Zagreb: “Reasoning about inexact temporal expressions using fuzzy logic and deep learning”

The problem of temporal reasoning has been known since antiquity. Logical analysis of contemporary relevance began in the second half of the twentieth century, when Arthur Prior developed temporal logic as a variant of modal logic. An alternative approach, based on classical predicate logic, was devised by the philosopher Donald Davidson. Davidson's proposal inspired both the event calculus and the interval algebra developed by James Allen. To date, many other approaches to representation and reasoning in this domain have been developed, such as temporal databases and temporal logic programming.

All these approaches represent temporal information either as a point on the timeline or as a uniform (crisp) interval. Many temporal expressions that occur across the social sciences and humanities, particularly history, as well as in everyday reasoning, cannot be adequately represented in this way. For example, if something happened during the Industrial Revolution, it is possible that it happened in 1745, but less likely than in 1801. Likewise, if we have an exact record that a woman became a mother in 1848, she could have been born on any day between 1790 and 1839, but she is more likely to have been born in 1828 than in 1800. A second level of uncertainty comes from the credibility or reliability of the source. For example, when verifying whether two events described in two historical references are concurrent and their dates do not overlap exactly, we are inclined to treat an event that occurred in the temporal vicinity as the more likely match, given the possibility of error in the source.
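To make graded membership concrete, here is a minimal Python sketch that assumes a trapezoidal membership function over years. This is a common choice in fuzzy-set modelling, not necessarily the representation used in this research. The class name `TrapezoidalFuzzyYear` and all breakpoints are hypothetical: the support of 1790 to 1839 comes from the example above, the peak at 1828 is an assumed reading of "more likely ... 1828", and the Industrial Revolution breakpoints are illustrative guesses.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class TrapezoidalFuzzyYear:
    """Hypothetical fuzzy set over years with a trapezoidal membership function.

    Membership rises linearly from 0 at `a` to 1 at `b`, stays at 1 from `b`
    to `c`, and falls linearly back to 0 at `d`. A triangular set is the
    special case b == c.
    """
    a: float
    b: float
    c: float
    d: float

    def membership(self, year: float) -> float:
        if year <= self.a or year >= self.d:
            return 0.0  # outside the support
        if self.b <= year <= self.c:
            return 1.0  # fully compatible years (the core)
        if year < self.b:
            return (year - self.a) / (self.b - self.a)  # rising edge
        return (self.d - year) / (self.d - self.c)  # falling edge


# Birth year of a woman recorded as becoming a mother in 1848:
# support 1790 to 1839 as in the text; peak at 1828 and the linear shape are assumed.
mother_of_1848_born = TrapezoidalFuzzyYear(1790, 1828, 1828, 1839)

# "During the Industrial Revolution": breakpoints are illustrative guesses.
industrial_revolution = TrapezoidalFuzzyYear(1740, 1780, 1830, 1850)

print(round(mother_of_1848_born.membership(1800), 3))  # 0.263
print(mother_of_1848_born.membership(1828))  # 1.0
print(industrial_revolution.membership(1745))  # 0.125
print(industrial_revolution.membership(1801))  # 1.0
```

The fixed trapezoid only illustrates the idea. The research described below instead generates the fuzzy set with a neural model, so its shape need not be piecewise linear.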

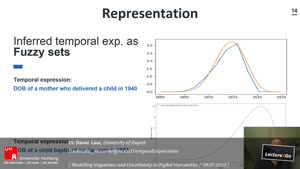

In this research, we investigate the possibility of representing the semantics of inexact temporal expressions using fuzzy sets. To validate the reasoning models empirically, the first part of the research develops a named-entity recognition (NER) system for recognising inexact dates in text. Although there are well-developed systems for identifying temporal expressions, such as SUTime and HeidelTime, their support for vague temporal expressions is limited. The resulting transformer-based model has been applied to English Wikipedia with a satisfactory F-score. The second part of the research is the development of a neural model that generates a fuzzy set representing the meaning of a temporal expression. Because these fuzzy sets represent temporal data at the granularity of individual days, they are very large, so a dimensionality-reduction model has been developed to allow efficient storage and manipulation of those sets. The third part of the research develops formal models for relations and operations on inexact dates. The most fundamental and most challenging of these relations is similarity between two imprecise dates, which is modelled using a fuzzy-logic t-norm operation with parameters learned from empirical data. The fourth part of the research is a logical and philosophical analysis of the problem of similarity in the context of temporal reasoning.
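The similarity relation can be illustrated with a small Python sketch. It rests on two assumptions that are ours, not necessarily the author's. First, the similarity of two fuzzy dates is taken to be the height of their intersection, that is, the maximum over days d of T(μ_A(d), μ_B(d)). Second, the parametric Hamacher t-norm family stands in for "a t-norm with learnable parameters". The helper names, the triangular membership shapes, and the dates are hypothetical, and the parameter λ is fixed by hand rather than learned from data. Because membership is evaluated once per day, the sketch also shows why day-granularity fuzzy sets grow large.

```python
from datetime import date, timedelta
from typing import Callable

FuzzyDate = Callable[[date], float]  # maps a day to a membership degree in [0, 1]


def hamacher_tnorm(a: float, b: float, lam: float) -> float:
    """Hamacher t-norm family for lam >= 0; lam = 1 gives the product t-norm."""
    if lam == 0 and a == 0 and b == 0:
        return 0.0  # the formula is 0/0 here; the family defines it as 0
    return a * b / (lam + (1 - lam) * (a + b - a * b))


def triangular_fuzzy_date(peak: date, spread_days: int) -> FuzzyDate:
    """Hypothetical helper: membership 1 on `peak`, falling linearly to 0
    at `spread_days` days before and after it."""
    def mu(day: date) -> float:
        distance = abs((day - peak).days)
        return max(0.0, 1.0 - distance / spread_days)
    return mu


def similarity(mu_a: FuzzyDate, mu_b: FuzzyDate,
               start: date, end: date, lam: float) -> float:
    """Height of the t-norm intersection of two fuzzy dates: the largest
    T(mu_a(day), mu_b(day)) over every day from `start` to `end` inclusive."""
    best = 0.0
    day = start
    while day <= end:
        best = max(best, hamacher_tnorm(mu_a(day), mu_b(day), lam))
        day += timedelta(days=1)
    return best


# Hypothetical example: two references dating an event a month apart.
mid_june = triangular_fuzzy_date(date(1848, 6, 15), spread_days=30)
mid_july = triangular_fuzzy_date(date(1848, 7, 15), spread_days=30)
window = (date(1848, 5, 1), date(1848, 8, 31))

print(similarity(mid_june, mid_july, *window, lam=1.0))  # 0.25 (product t-norm)
print(round(similarity(mid_june, mid_july, *window, lam=0.0), 3))  # 0.333
```

With the product t-norm (λ = 1), the two dates a month apart score 0.25. Lowering λ to 0 (the Hamacher product) raises the same pair to about 0.333, so the parameter controls how generously partial overlaps are rewarded. In a learned setting, λ would be fitted so that these scores match empirical judgements of concurrency.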

---

https://www.inf.uni-hamburg.de/inst/dmp/hercore/publications/vaguenessuncertainty2020.html

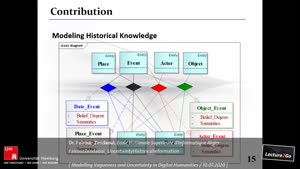

Digital Humanities (DH) aims not only to archive materials (in particular historical artefacts) and make them available, but also to bring more rigorous scientific reflection into the humanities by promoting computational methods. However, more than ten years of consistent use of computer-aided research have not led to hermeneutically adequate digital modelling of historical objects. In most DH efforts, the crux remains the same: objects are stored in database architectures designed for natural-science applications, annotated with very general metadata, marked up with shallow linguistic information regardless of the language or purpose of the document, and analysed quantitatively. Not only do images and texts become artificially precise, but the mutual illumination of texts and other media also loses its traditional hermeneutic power.

Vagueness is one of the most important yet most difficult features of historical objects, especially texts and images. Whereas ambiguity (several distinct but clear meanings) and uncertainty (conceptually clear but unknown or forgotten data) are relatively well-describable phenomena, vagueness remains undefined by semantics or pragmatics.

This workshop aims to bring together, for the first time, experts in the representation of vagueness and uncertainty and DH scholars who have gone beyond the state of the art in their research and attempted to apply existing theories, such as fuzzy logic, in their work.